OpenStreetMap NextGen Benchmark 1 of 4: Static and unauthenticated requests

Posted by NorthCrab on 7 March 2024 in English.

Today marks a milestone in the development of OpenStreetMap NextGen. After months of rigorous development, I conducted the first OpenStreetMap NextGen performance benchmark, a crucial step towards realizing the vision of a more robust, efficient, and user-friendly OpenStreetMap.

The focus of today’s benchmark was on evaluating static and unauthenticated requests. Since this core functionality is unlikely to change significantly during future development, it’s the perfect time to test it.

Future benchmarks will focus on timing authenticated requests as well as API 0.6.

What was measured

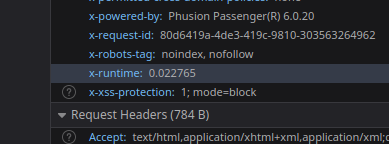

The benchmark analyzed request processing speed, excluding network and client latency. Both osm-ruby and osm-ng support the X-Runtime response header, which tracks how long it takes to process a request and generate a response.
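To make the measurement concrete, here is a minimal sketch of how a server could produce such a header. It is a generic WSGI middleware written for illustration, not the actual implementation in osm-ruby or osm-ng; the class name `RuntimeHeaderMiddleware`, the stand-in `hello_app`, and the choice to report seconds with six decimals are all assumptions.

```python
import time


class RuntimeHeaderMiddleware:
    """WSGI middleware that reports server-side processing time in X-Runtime."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        started = time.perf_counter()

        def timed_start_response(status, headers, exc_info=None):
            # Time elapsed between receiving the request and having the
            # response headers ready; network and client latency are not included.
            elapsed = time.perf_counter() - started
            headers = list(headers) + [("X-Runtime", f"{elapsed:.6f}")]
            return start_response(status, headers, exc_info)

        return self.app(environ, timed_start_response)


def hello_app(environ, start_response):
    # Hypothetical stand-in for a handler that renders a static page.
    start_response("200 OK", [("Content-Type", "text/html")])
    return [b"<h1>hello</h1>"]
```

Because the timer starts and stops inside the server process, the reported value is independent of where the client sits, which is what makes it comparable across local and remote deployments.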

Here’s a general breakdown of a typical static request processing:

- Grabbing configuration settings

- Checking for authorization (cookies, oauth, etc.)

- Configuring translations

- Rendering the HTML template

The setup

The benchmarking setup consisted of local machine testing, as well as official production and development websites.

I initially planned to run the benchmark solely on my local machine, following the official Docker instructions. However, I quickly discovered that the production deployment instructions were outdated and required some Ruby knowledge to fix, which I lacked. In particular, the instructions for replacing the Rails server with Phusion Passenger had been redirected to a generic support page.

osm-ng was launched in production mode with all Python and Cython optimizations enabled. Since we were only dealing with static requests, both local Postgres databases remained empty.

The benchmarking script

I created a basic HTTP benchmarking script that first warmed things up with a few requests before launching into the actual test. It then recorded the runtime of each request in a series of HTTP requests, and I repeated the benchmark multiple times for consistency.
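A script of this kind could look roughly like the sketch below. This is not the original script; the function names, the default warm-up and sample counts, and the example URL are assumptions made for illustration.

```python
import statistics
import urllib.request


def fetch_runtime(url):
    """Return the server-reported X-Runtime (in seconds) for one GET request."""
    with urllib.request.urlopen(url) as response:
        response.read()  # drain the body so the request fully completes
        # Raises TypeError if the server does not send an X-Runtime header.
        return float(response.headers["X-Runtime"])


def benchmark(measure, warmup=10, samples=100):
    """Call measure() `warmup` times (results discarded), then summarize `samples` runs."""
    for _ in range(warmup):
        measure()
    runtimes = [measure() for _ in range(samples)]
    return {"min": min(runtimes), "median": statistics.median(runtimes)}
```

It could be run against a local server with something like `benchmark(lambda: fetch_runtime("http://localhost:8000/"))`, where the address is a placeholder. Passing the measurement as a callable keeps the warm-up and statistics logic separate from the HTTP details.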

A note before the results

It’s important to remember that OpenStreetMap NextGen processes static requests in a similar way to osm-ruby, and it does not (currently) introduce any new caching logic for templates, especially since that would significantly impact the benchmark results (and some people would consider it cheating). osm-ng remains completely backwards compatible with the existing OpenStreetMap platform. Additionally, it’s important to emphasize that the X-Runtime header used for benchmarking is agnostic to network latency, meaning it only measures the processing time on the server itself.

And the winner is…

Here’s a detailed breakdown of the results:

| Environment | Minimum Runtime (s) | Median Runtime (s) |
|---|---|---|
| Ruby (local) | 0.04264 | 0.04521 |
| Ruby (official) | 0.01892 | 0.02921 |
| Ruby (test) | 0.00913 | 0.01725 |
| Python | 0.00314 | 0.00325 |

As you can see, osm-ng outperformed osm-ruby in every test scenario. The fastest Ruby deployment had a minimum runtime of 0.00913 seconds, while osm-ng achieved a blazing-fast 0.00314 seconds. That makes osm-ng about 2.9 times as fast (0.00913 / 0.00314 ≈ 2.91), or roughly 290% of the Ruby speed.
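The comparison can be checked directly from the table. The short calculation below uses only the minimum runtimes reported above; the helper names are made up for this example.

```python
def speedup(baseline, candidate):
    """How many times faster the candidate is than the baseline."""
    return baseline / candidate


def time_saved(baseline, candidate):
    """Fraction of the baseline runtime that the candidate saves."""
    return 1 - candidate / baseline


ruby_test_min = 0.00913  # fastest Ruby deployment, minimum runtime (s)
python_min = 0.00314     # osm-ng, minimum runtime (s)

print(f"speedup: {speedup(ruby_test_min, python_min):.2f}x")                   # speedup: 2.91x
print(f"time saved per request: {time_saved(ruby_test_min, python_min):.0%}")  # time saved per request: 66%
```

Put differently, a request that takes osm-ruby 9.13 ms of processing on the test server takes osm-ng 3.14 ms locally, which is about a third of the time.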

Support the NextGen revolution

I’m truly convinced that OpenStreetMap NextGen will be a game-changer for OpenStreetMap, not just in terms of performance, but also in privacy, security, usability, and overall openness.

If you believe in this project as much as I do, please consider donating so I can keep working on it full-time! 🙏 Every contribution helps push us closer to that first stable release.

And a huge thank you to those who have already supported me!

Today, we benchmarked not just a system, but the future. And the future is bright.

Discussion

Comment from MxxCon on 7 March 2024 at 22:20

What about 95th/99th % numbers? What about memory utilization during the benchmarks?

Comment from NorthCrab on 8 March 2024 at 04:30

Hey! I believe the p95/99 during synthetic benchmarks would be at best non-informative and at worst misleading. Those numbers are easily impacted by background load and are best if measured during real usage. OpenStreetMap-NG will measure those numbers after deployment on real requests (it includes optional Sentry tracing integration). You will need to wait a little bit more to see them. I don’t know how to get those numbers out of osm-ruby though. As for memory utilization, I agree that it would be nice to see it, but for an average map user, it doesn’t matter. And because of that, it’s not currently on my list of priorities.

Comment from MxxCon on 8 March 2024 at 04:53

95th/99th % request times are just as (non-)informative as any other performance numbers of synthetic benchmarks. 🤷‍♂️

Well, if you are claiming 290% performance improvements, but it is achieved only at the cost of 100x more memory utilization, such a system might not be scalable to production level traffic.

Comment from NorthCrab on 8 March 2024 at 05:03

“(…) as any other performance numbers of synthetic benchmarks”, not really. Small p values are the least impacted by external factors, meaning that if you want to measure absolute CPU performance, they are the most reliable. Big p values are the most impacted by external factors, meaning that you would want to use them to measure overall system performance. Because of that, they are mostly meaningful when measured in an actual deployment scenario with real requests. It’s very difficult (if at all possible) to mimic actual system usage during synthetic benchmarks.
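The argument about small and large percentiles can be illustrated with a small sketch. The numbers below are made up for illustration and are not measurements from this benchmark; the nearest-rank `percentile` helper is one of several common definitions and was chosen here only for simplicity.

```python
import math
import statistics


def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(runtimes):
    return {
        "min": min(runtimes),
        "median": statistics.median(runtimes),
        "p95": percentile(runtimes, 95),
        "p99": percentile(runtimes, 99),
    }


# Made-up data: 100 requests at 3 ms, then the same run where
# background load delays 8 of the requests to 50 ms.
quiet = [0.003] * 100
noisy = [0.003] * 92 + [0.050] * 8

print(summarize(quiet))
print(summarize(noisy))
```

In this toy data the minimum and median are identical for both runs, while p95 and p99 jump from 3 ms to 50 ms as soon as a few requests are disturbed.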

Comment from o_andras on 8 March 2024 at 12:28

What’s Ruby local/official/test?

Comment from mmd on 9 March 2024 at 21:30

First of all, I find it difficult to make some sense of these figures, since measurements were done on different boxes with fairly different hardware specs. For “Ruby official” and “Ruby test” one can refer to https://hardware.openstreetmap.org/ : spike-0[6-8] are the production osm.org frontend servers, faffy is the development/test server, all of which are running openstreetmap-website as a Rails application. “Python” measurements were presumably performed on a private box with unknown hardware specs.

Dockerfiles are mainly aimed at local development, and are not suitable for performance measurements. They’re starting up a Rails application in development mode, rather than production mode. It is expected that runtimes in development mode are much higher. I would assume that “Ruby (local)” runtimes were collected in this mode.

As an outlook: I expect to see some nice speedups for these types of micro-benchmarks when using Ruby 3.3.0 with YJIT enabled (currently not yet enabled on osm.org servers). To get an idea about the relative speed difference, I did a very quick test run on my laptop:

Without YJIT:

With YJIT enabled:

Local server started as:

RUBY_YJIT_ENABLE="1" RAILS_ENV=production bundle exec rails s

Comment from Woazboat on 18 March 2024 at 12:33

Could you please include some more information about how you set up the services and how these benchmarks can be reproduced by others?

For future benchmarks, information about resource utilization (CPU/memory) would also be useful, otherwise we’re only seeing half the story.

Faster response times for static pages are nice, but will ultimately have only a negligible impact. Some more benchmarks for actual OSM API calls with realistic data would be useful.

Comment from NorthCrab on 18 March 2024 at 12:51

@o_andras They are different deployment environments. Local is the same environment that the NextGen code was tested in (my local machine). I included the other deployments for extra comparison because the current osm-website deployment documentation seems to be outdated and I did not want to make the benchmark so one-sided.

@mmd Isn’t production also using Docker images? I followed the project’s deployment documentation, so I assume that’s the issue (that it differs from the actual deployment). I am looking forward to revisiting the benchmarks once details like that are sorted out. That’s also the reason why I started with such a simple benchmark first, to test the waters. I am only planning on benchmarking applications as deployed using official instructions.

@Woazboat Those are really good suggestions! I will apply them during my work on the 2nd benchmark some time soon. The full set of benchmarks will consist of 4 tests with an increasing level of complexity. This first test focused just on static and unauthenticated requests, as this part of the NextGen codebase is unlikely to change.

Comment from mmd on 18 March 2024 at 14:46

The openstreetmap-website project has no dependency on Docker, and it’s also not used in production. Someone contributed a Dockerfile a while ago with the idea to facilitate local development. It is completely optional, meaning you can easily set up your local development (or production) environment without Docker. By the way, I don’t use Docker for my local Rails setup either, like a good part of the other contributors.

The Rails app on osm.org production is managed through the Chef repository, which you can find here: https://github.com/openstreetmap/chef/tree/master/cookbooks/web

It includes all the steps to set up a production environment. I still find it easy enough to read and go through. The number of configuration settings may seem a bit daunting at first, so plan some time and don’t be afraid to ask questions if something is not clear to you. I do this all the time.

Regarding performance testing, I would assume that RAILS_ENV=production would be a good starting point for measurement with puma (the default webserver). I also compared puma with Phusion Passenger and found runtime differences to be negligible. So let’s try to keep it simple and check first that your local Rails server is using the right settings.

Comment from fititnt on 19 March 2024 at 00:02

There’s at least one flaw in the methodology: the benchmark is mostly measuring docker overhead, not the ruby code in production.

In cases such as fast running operations, this overhead becomes significant.

But I can understand this might be the first time you are doing this type of benchmark, so it’s okay to make this mistake.

Comment from mmd on 19 March 2024 at 11:36

I cannot confirm this. When running the Rails server in development mode, you will notice a similar increased runtime even without Docker in place. This is expected behavior, as mentioned before. Rails development mode is not suitable for performance testing.

Comment from fititnt on 19 March 2024 at 18:51

Hummm. So the benchmark is running both inside Docker and development mode.

One reason such a difference feels strange to me is that, in general, the same algorithm gives similar performance across programming languages that are similar (e.g. interpreted vs interpreted). So unless one alternative is doing more work, Ruby vs Python on recent versions would likely give similar results. That is why I assumed it was Docker.

By the way, this benchmark is doing something too simple (a static page). As soon as it starts to work with real data, a heavy part of the work will come from the database (which I assume will be the same for Ruby and Python), which means any performance difference is likely to be smaller. And, if not smaller, it might be easy to optimize the queries in the Rails port.
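The point about database time can be put into numbers with a short calculation. The application times below are the minimum runtimes from the results table; the database times are hypothetical values chosen only to show the trend, and the function name is made up for this example.

```python
def overall_speedup(app_old, app_new, shared):
    """Speedup of the total request time when only the application part gets faster.

    `shared` is time spent in work that is identical for both implementations,
    such as database queries.
    """
    return (app_old + shared) / (app_new + shared)


app_ruby = 0.00913    # s, from the results table (Ruby test, minimum)
app_python = 0.00314  # s, from the results table (Python, minimum)

# Hypothetical database times, for illustration only.
for db_time in (0.0, 0.010, 0.050):
    ratio = overall_speedup(app_ruby, app_python, db_time)
    print(f"db time {db_time * 1000:.0f} ms -> overall speedup {ratio:.2f}x")
```

The larger the share of time spent in work common to both implementations, the closer the overall ratio gets to 1.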

Comment from Woazboat on 19 March 2024 at 20:28

That is not true. There can be massive differences in speed between programming languages even if they are all interpreted/compiled and the algorithm is exactly the same (which is not the case here, web frameworks are unlikely to be exactly identical and have different features, etc…).

Docker is also unlikely to introduce any significant performance overhead.

Comment from fititnt on 20 March 2024 at 00:57

Yep. This part is relevant. Simplistic benchmarks such as serving static content can vary more between frameworks of the same language than between typical frameworks of two different languages (given a reasonable effort to optimize how each runs in production). Django (Python) and rails-api (Ruby), for example, are both among the most popular with developers, yet in benchmarks they are the slowest.

The typical production use of web applications always involves some kind of web framework (even a minimalistic one), especially if the language is interpreted (which is the case for Python and Ruby, but perhaps less so for C, Go, Rust, etc.). One reason is that not using any framework means that not only the developer but also the contributors must know how to safely implement things such as authentication, session management, tokens, and so on. It also means documenting (and keeping up to date) the conventions for how to organise the code.

I tried to find comparisons between the two frameworks, and the difference is small (maybe there are too few comparisons; however, in the ones I found, Ruby on Rails is a bit faster than Django on the oversimplified tests). So I think the assumption that a full application written in modern Python is necessarily faster than one in modern Ruby may not hold as more features are added. While the current version of his code is not using Django, even if we take as a baseline a Python framework with better performance, the more features he adds, the less prominent the difference will be.

Trivia: at least on https://benchmarksgame-team.pages.debian.net/benchmarksgame/fastest/ruby-python3.html (even without a web framework, database access, etc.), some of the Ruby implementations (using Ruby 3.2.0 + YJIT) are more efficient than their Python equivalents.