Many OSM validators report an error when a waterway crosses a highway without additional tags or structure at the intersection, such as a bridge, culvert, or ford. That check makes sense where the roads and waterways are already well mapped and where the waterways actually carry water. Those assumptions may hold in Europe, for example, but they don't hold well in the Southwest United States.

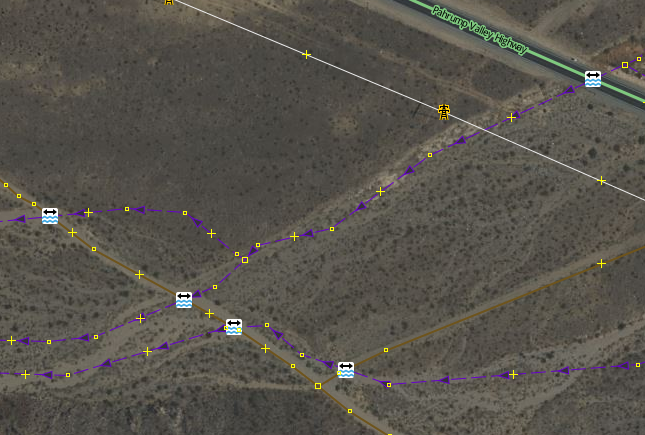

Of the possible resolutions, adding a node with ford=yes is the easiest, so many mappers do exactly that to satisfy the validator, sometimes without looking closely at the situation. The result is something like the example shown above, where the ford=yes nodes are meaningless.

If a validator warns you that a waterway and a highway intersect and need additional tagging, consider a few things first: