Some months ago, I was looking around OSM to find where the bulk of the noise and inefficiency is. I’m aware of some other efforts (like Toby in 2013), but I went so far as to write a C++ app on Osmium that parses PBF extracts, simulates running line simplification, and produces a list of the least efficient ways.

I ran this on various US states and countries worldwide, and the winner is… North Carolina. It is so wildly inefficient that we may as well not bother with the rest of the world until we’ve cleaned up North Carolina first. (Just for comparison, the size of the output: Finland 17k, Colombia 20k, Colorado 30k, England 45k, North Carolina >300k).
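
The Osmium app itself isn’t included in this post. Here is a minimal Python sketch of what “simulating line simplification” and ranking ways could look like. It assumes way geometries have already been projected to local planar meters. It uses classic Douglas-Peucker, which may not match JOSM’s SimplifyWayAction or the author’s C++ exactly. The names `simplify`, `rank_ways`, and `point_segment_distance` are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Distance (m) from point p to segment a-b, all in local planar meters."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment, clamping to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(points, tolerance_m):
    """Douglas-Peucker: keep a node only if it deviates more than tolerance_m."""
    if len(points) < 3:
        return list(points)
    keep = [False] * len(points)
    keep[0] = keep[-1] = True
    stack = [(0, len(points) - 1)]
    while stack:
        first, last = stack.pop()
        # Find the node in (first, last) farthest from the chord first-last.
        max_dist, index = 0.0, None
        for i in range(first + 1, last):
            d = point_segment_distance(points[i], points[first], points[last])
            if d > max_dist:
                max_dist, index = d, i
        # Keep it and process both halves only if it exceeds the tolerance.
        if index is not None and max_dist > tolerance_m:
            keep[index] = True
            stack.append((first, index))
            stack.append((index, last))
    return [p for p, k in zip(points, keep) if k]


def rank_ways(ways, tolerance_m):
    """Return (way_id, node_count, removable_nodes), most removable first."""
    results = []
    for way_id, points in ways.items():
        removable = len(points) - len(simplify(points, tolerance_m))
        results.append((way_id, len(points), removable))
    return sorted(results, key=lambda r: r[2], reverse=True)


if __name__ == "__main__":
    ways = {
        101: [(float(x), 0.0) for x in range(301)],     # 301 evenly spaced nodes on a straight line
        102: [(0.0, 0.0), (50.0, 20.0), (100.0, 0.0)],  # a genuine bend, nothing to remove
    }
    print(rank_ways(ways, tolerance_m=0.7))  # [(101, 301, 299), (102, 3, 0)]
```

Running this over every way in an extract and keeping the worst offenders gives a list like the per-region outputs compared above.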

Why is North Carolina (henceforth NC) so obese? There are a handful of bad spots elsewhere (like some of the Corine landuse in Europe, and a waterway import in Cantabria, Spain), but nothing close to NC. It’s due almost entirely to a single import in 2009. The USA’s hydrography dataset, NHD, is truly massive. An account called “jumbanho” imported NHD for NC and apparently applied almost no cleanup (besides a small pass at removing duplicate nodes a few months later). Among the many flaws of that import:

- Topology is mostly missing (features meet but don’t share a node)

- Really out of date (shows swamps that were drained decades ago, streams running through what are now shopping malls).

- Almost all of it is barely or not at all decimated (a stream which is perfectly modeled in 15 nodes is sometimes made of 300 nodes).

As a result, the jumbanho account has noderank #3 with 43 Mnodes (this was rank #2 with 49 Mnodes, but as I’ll explain, I’ve been busy).

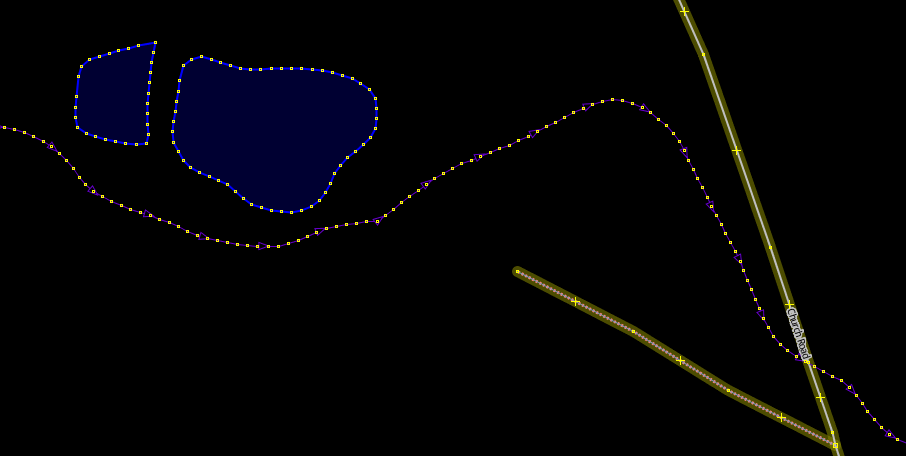

This is what the data looks like:

As you can see, the nodes form a regular, evenly spaced set, with no decimation. This is worse when you consider that the overall positional accuracy is far worse than that node density suggests: here the pond is 7 m off, and the stream is variously 12, 14, or 32 m off:

This inefficiency is bad in a few ways, such as making the planet file balloon with dead weight. But a more relevant issue is this: When a user comes in here to fix the alignment of the data, there is NO WAY they can be expected to move all 200 points by hand. An import with too many points is highly resistant to EVER getting manually fixed. The solution is to simplify first, but by how much? Here we encounter some issues:

- The simplify tool in JOSM defaults to 5 (!) meters, which is brutal and useless for just about any use I can think of (maybe very, very rough old GPS traces?).

- JOSM lets you change the amount, but it is buried deep in the “advanced preferences.”

- Once you find that, knowing how much to simplify each kind of feature is a matter of experience and skill.

After hundreds of hours of manual work on NC, I have learned which values work. These are general guidelines, which I carefully tweak for each area:

- natural=wetland. These are very rough: 1.0-1.2 m.

- waterway=stream, waterway=riverbank, natural=water. These are more delicate; I use 50-80 cm.

- Streams and rivers that are either inside wetlands or are “artificial paths”: these are often notional and don’t correspond closely to any real feature, so >1 m.
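
One way to make these guidelines repeatable is to encode them as a lookup. This is a hypothetical sketch. The function name and the `inside_wetland` / `artificial_path` flags are made up for illustration, and deciding those flags from real data is a separate problem. It returns the ranges above rather than a single value, because the values still get tweaked per area.

```python
def tolerance_range(tags, inside_wetland=False, artificial_path=False):
    """Hypothetical lookup of the simplify tolerances listed above.

    Returns (min_m, max_m) in meters; max_m is None where only "> 1 m" is given,
    and the whole result is None for features the guidelines don't cover.
    """
    waterway = tags.get("waterway")
    natural = tags.get("natural")
    # Notional lines: streams/rivers inside wetlands or NHD "artificial paths".
    if waterway in ("stream", "river") and (inside_wetland or artificial_path):
        return (1.0, None)
    # Very rough wetland outlines.
    if natural == "wetland":
        return (1.0, 1.2)
    # More delicate features: 50-80 cm.
    if waterway in ("stream", "riverbank") or natural == "water":
        return (0.5, 0.8)
    return None


print(tolerance_range({"natural": "wetland"}))                       # (1.0, 1.2)
print(tolerance_range({"waterway": "stream"}))                       # (0.5, 0.8)
print(tolerance_range({"waterway": "stream"}, inside_wetland=True))  # (1.0, None)
```

A plain waterway=river outside a wetland falls through to `None` here, because the guidelines above don’t give a value for it.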

Note that these tolerances are WAY smaller than the actual inaccuracy of the data; they cannot harm the value of the data, because they are too small to matter. In fact they could be bigger, but the goal is to leave enough nodes so that a human editor won’t have to add or remove many nodes when aligning the feature to its correct location.
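
The claim that simplification “cannot harm the value in the data” can be made precise with a short bound. Measure error as the Hausdorff distance $d_H$ between lines. Assume a Douglas-Peucker-style simplify, which keeps the simplified line within the tolerance $\varepsilon$ of the original ($d_H(O, S) \le \varepsilon$). Write $T$ for the true feature, $O$ for the imported way, $S$ for the simplified way, and $e$ for the import’s positional error. The triangle inequality then gives:

$$d_H(T, S) \le d_H(T, O) + d_H(O, S) \le e + \varepsilon$$

For the pond above ($e = 7$ m) with $\varepsilon = 0.8$ m, simplifying can add at most about 11% to the error that is already there. For the stretch of stream that is 32 m off, it can add at most 2.5%.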

While I would be happy to just write a bot to do that first step, that would be a “mechanical edit” and I’d have to put up with mailing list arguments to get permission. (I’d also have to write that bot, which I’ve been too lazy to do so far). So instead, I’ve put in the time to do it all manually in JOSM, with steps like:

- Study each area, compare the features to the imagery.

- Do a super careful simplify with appropriate values. (It gets really tiring having to dig into JOSM’s advanced preferences every single time I change the value.)

- Fix the topology by carefully tuning the validator’s precision and allowing it to auto-fix, with manual verification.

- Some manual adding of bridges and culverts.

- Removing/updating non-existent wetlands and streams (one common clue: they intersect buildings).

- Splitting some ways and creating relations, for example for a large riverbank and wetland that share an edge.
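
The topology step relies on a snapping distance. Nodes from different features that are closer than some tolerance probably should be one shared node. Here is a minimal Python sketch that finds such candidates with a grid (a spatial hash). It assumes node coordinates in local meters, and that you pass in only the nodes worth checking, such as way end nodes. This is not how JOSM’s validator works internally, and `near_miss_pairs` is a hypothetical name. Every candidate still needs the manual verification described above.

```python
import math
from collections import defaultdict


def near_miss_pairs(nodes, snap_m):
    """Find pairs of distinct nodes closer than snap_m (candidate merges).

    nodes: dict of integer node_id -> (x, y) in local meters.
    Buckets nodes into grid cells of size snap_m, so each node only needs
    to be compared with nodes in its own and the 8 neighbouring cells.
    """
    grid = defaultdict(list)
    for nid, (x, y) in nodes.items():
        grid[(math.floor(x / snap_m), math.floor(y / snap_m))].append(nid)
    pairs = []
    for (cx, cy), members in grid.items():
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for other in grid.get((cx + dx, cy + dy), []):
                    for nid in members:
                        # nid < other reports each pair exactly once.
                        if nid < other and math.dist(nodes[nid], nodes[other]) < snap_m:
                            pairs.append((nid, other))
    return sorted(pairs)


# Two stream ends that meet but don't share a node, plus an unrelated node.
print(near_miss_pairs({1: (0.0, 0.0), 2: (0.3, 0.2), 3: (10.0, 10.0)}, snap_m=0.5))
```

The grid keeps the search close to linear in the number of nodes, which matters at NC scale. Comparing every node against every other node would not.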

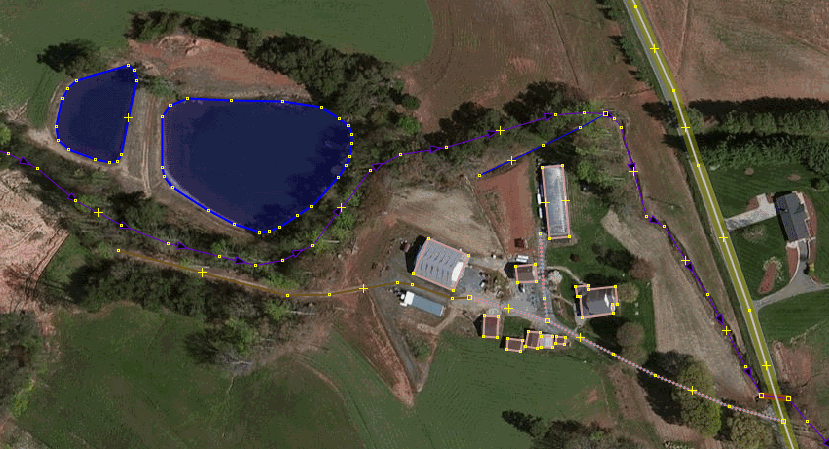

Here is that same area after a simplify to 70cm on the NHD features, then quick manual alignment:

It’s exhausting. In fact, a bot wouldn’t really help that much: the simplify is only the first step, and the topology and the rest still need to be done by a human anyway.

By my rough calculation, if I work hard for 5 hours every night, it would take around 5 months for me to finish cleaning up NC NHD to a decent level.
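
As total effort, and assuming roughly 30 working nights a month, that estimate comes to:

$$5\ \frac{\text{h}}{\text{night}} \times 30\ \frac{\text{nights}}{\text{month}} \times 5\ \text{months} \approx 750\ \text{h}$$

That is about 19 full 40-hour work weeks.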

On the plus side, other NHD imports I’ve seen around the USA (like Oklahoma) don’t seem to be nearly as bad; while they suffer from most of the same quality issues, at least they were already simplified before uploading.

Discussion

Comment from imagico on 30 November 2015 at 09:32

One problem of NHD as well as other waterbody imports is that river/stream classification is often either off or completely missing and this is often very difficult to assess properly from imagery alone.

Your observations re. accuracy match what I experienced with NHD data. It is very variable, both in positional accuracy and in the age of the data. In some areas NHD data is clearly very old (probably 1950s-1960s).

With your ‘data efficiency analysis’, make sure that when you apply it worldwide you take projection distortion into account; otherwise you will probably end up with very wrong results at high latitudes.
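
The concern is that a tolerance applied in raw degrees, or in the units of a projection such as Web Mercator, does not stay the same size on the ground. Its real size changes with latitude. Here is a minimal Python sketch, assuming a spherical Earth and a local equirectangular projection. It shows how quickly the ground length of a degree of longitude shrinks toward the poles. The constant and function names are illustrative assumptions, not JOSM’s.

```python
import math

# Mean Earth radius in meters; an assumed spherical model, not JOSM's constant.
EARTH_RADIUS_M = 6_371_008.8


def meters_per_degree(lat_deg):
    """Approximate ground length (m) of one degree of latitude and of longitude."""
    per_degree = math.radians(1.0) * EARTH_RADIUS_M
    return per_degree, per_degree * math.cos(math.radians(lat_deg))


def to_local_meters(points):
    """Project (lat, lon) degrees to planar (east, north) meters around the first point.

    Uses an equirectangular approximation scaled at the mean latitude,
    which is adequate for a single way but not for large areas.
    """
    mean_lat = sum(lat for lat, _ in points) / len(points)
    m_lat, m_lon = meters_per_degree(mean_lat)
    lat0, lon0 = points[0]
    return [((lon - lon0) * m_lon, (lat - lat0) * m_lat) for lat, lon in points]


for lat in (0, 35, 60, 70):
    _, m_lon = meters_per_degree(lat)
    print(f"lat {lat:2d}: 1e-5 deg of longitude = {m_lon * 1e-5:.2f} m")
```

A simplification that measures deviation in local meters like this, or geodetically, is unaffected by latitude.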

JOSM could by default offer a scale-independent simplification (using the node density along the line to set the simplification threshold).
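
Here is a minimal sketch of that suggestion, assuming local planar meters: derive the threshold from the median spacing of consecutive nodes, so densely noded lines get a finer tolerance than coarse ones. The `fraction` and `floor_m` values are arbitrary placeholders, and `adaptive_tolerance` is a hypothetical name, not an existing JOSM feature.

```python
import math
import statistics


def adaptive_tolerance(points, fraction=0.25, floor_m=0.1):
    """Hypothetical scale-independent tolerance derived from node density.

    points: list of (x, y) in local meters. Returns a fixed fraction of the
    median spacing between consecutive nodes, but never less than floor_m.
    """
    spacings = [math.dist(a, b) for a, b in zip(points, points[1:])]
    if not spacings:
        return floor_m
    return max(floor_m, fraction * statistics.median(spacings))


print(adaptive_tolerance([(0.0, 0.0), (4.0, 0.0), (8.0, 0.0)]))  # 1.0
```

Using the median rather than the mean keeps one long gap in an otherwise dense way from inflating the threshold.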

Comment from SK53 on 30 November 2015 at 10:13

This is a really useful discussion.

I remember trying to tidy up bits of this data around Wrightsville Beach and giving up. It didn’t help that my memory of what was there is rather fuzzy, but it was quite clear that some wetland features just shouldn’t have been there. I suspect most of my cleanup was just node de-duplication. Certainly I did enough of that sort of thing to write-up my process. I think EdLoach used this idea tidying up Georgia landuse until someone objected.

Looks like I need to run over the Colorado Basin data too, also complicated by intermittent watercourses.

Lastly, I’d not come across the term “decimation” applied in this way before: obviously it’s a GIS term. Not all of us have that background, although it’s fairly understandable after the second or third reading.

Comment from bdiscoe on 30 November 2015 at 18:32

@imagico you wrote about “projection distortion” and “scale independent”. AFAICT, JOSM already does this correctly. In fact while I was writing my Osmium-based simplification test code, I spent hours looking at the (many!) ways to approximate displacements, but finally just adapted the code directly from JOSM itself (SimplifyWayAction.java) translating from Java to C++. (See all the geodetic stuff in there about EARTH_RAD and “Aviaton Formulary v1.3”)
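
For readers curious about the geodetic part, here is a minimal Python sketch of the standard spherical cross-track distance formula, the kind of displacement measure the Aviation Formulary describes. It is not a transcription of JOSM’s SimplifyWayAction. The radius constant is an assumption (JOSM’s EARTH_RAD may differ), the function names are hypothetical, and the sign convention (negative means left of the path) follows the common east-positive formulation.

```python
import math

# Assumed mean Earth radius in meters; JOSM's EARTH_RAD constant may differ.
EARTH_RADIUS_M = 6_371_008.8


def _angular_distance(lat1, lon1, lat2, lon2):
    """Great-circle angular distance (radians) via the haversine formula."""
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * math.asin(math.sqrt(h))


def _initial_bearing(lat1, lon1, lat2, lon2):
    """Initial great-circle course (radians, clockwise from north) from 1 to 2."""
    y = math.sin(lon2 - lon1) * math.cos(lat2)
    x = (math.cos(lat1) * math.sin(lat2)
         - math.sin(lat1) * math.cos(lat2) * math.cos(lon2 - lon1))
    return math.atan2(y, x)


def cross_track_m(start, end, point):
    """Signed distance (m) of point from the great circle through start and end.

    All arguments are (lat, lon) in degrees; negative means left of start->end.
    """
    lat1, lon1, lat2, lon2, lat3, lon3 = map(math.radians, (*start, *end, *point))
    d13 = _angular_distance(lat1, lon1, lat3, lon3)
    theta13 = _initial_bearing(lat1, lon1, lat3, lon3)
    theta12 = _initial_bearing(lat1, lon1, lat2, lon2)
    return math.asin(math.sin(d13) * math.sin(theta13 - theta12)) * EARTH_RADIUS_M


# A node 0.001 deg north of an eastbound segment along the equator (~111 m, left side).
print(cross_track_m((0.0, 0.0), (0.0, 1.0), (0.001, 0.5)))
```

A simplifier would drop a node when the absolute value of this distance, measured against the chord between the kept neighbours, is below the tolerance.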

As for river/stream classification, among all of NHD’s many issues, I haven’t seen much trouble with that (the classification usually roughly matches the imagery, at least), but I have seen some NHD in western Colorado where all the ditches/drains came in as “canal”, which was clearly wrong.

@SK53 I apologize for “decimation” as jargon, not so much from GIS, more from computer graphics (my background). At least I managed to avoid calling them LODs :)

Comment from imagico on 30 November 2015 at 21:03

Yes, JOSM does it correctly, if you do it like JOSM you should be fine.

Comment from Alan Bragg on 1 December 2015 at 03:52

Very interesting. Thanks for posting this, I’ve learned a lot and hope to put it to good use as I work with NHD ways in Massachusetts.

Comment from gormo on 4 December 2015 at 21:09

I got stuck in Greenville, SC after being led there by MapRoulette, and have also seen NHD data that is really way off, running through big industrial areas etc. But I am very reluctant to delete those, as I don’t know whether the waterbodies have been put underground in culverts or removed completely.

So I align where I can, but I mainly leave alone the waterbodies I would classify as errors, because I don’t have on-the-ground knowledge.

Should I place notes at those locations?

Comment from SK53 on 4 December 2015 at 21:14

@bdiscoe: no problem, its meaning was fairly obvious. As for canal/ditch in the western US: this is a real mess. We also don’t have sensible ways of separating an irrigation channel which is a foot wide from massive 60-100 foot ones, and those from canals for boats. My impression is that most of the CO ones are little more than cut & cover channels of a few inches/feet; but one can’t see anything on aerial imagery and therefore tends toward the (false) presumption that NHD data knows what it’s doing.

I played a bit with a random area in the Upper Colorado Basin, where the data isn’t quite as insane as in NC, but just a quick random edit stripped a few thousand nodes out of the imported NHD data.

Comment from bdiscoe on 7 December 2015 at 19:41

@gormo Thanks for pitching in on SC. Your cautious approach, avoiding deleting waterways, is generally good OSM practice. That said, sometimes it’s very obvious that a pond/stream has been destroyed and replaced with a shopping center or golf course. I have no issue with removing/updating the clearly non-existent waterways; you could do that (or place notes).

@SK53 I feel very comfortable with the waterway tags. I understand the ditch/canal distinction the same as stream/river, only with artificial waterways. In either case, the crossover is around 3 meters of visible water / potential water area. Both ditch/canal and stream/river have some intermediate-sized ones that could go either way. I understand “drain” as a ditch which is so skinny that it has little or no visible/potentially visible water. Practically every artificial waterway in NHD is a ditch or drain; there are really very few canals, even ones with “Canal” in their name like the Denver “High Line Canal” which is definitely a ditch by OSM’s definition.
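
The rule of thumb above can be written as a small decision function. This is a hypothetical sketch. The name, parameters, and the hard cutoff at exactly 3 m are illustrative, since the comment says intermediate sizes could go either way, and “visible water” still has to be judged from imagery.

```python
def waterway_tag(width_m, artificial, visible_water=True, crossover_m=3.0):
    """Hypothetical encoding of the stream/river and ditch/drain/canal rule above.

    width_m: visible or potential water width in meters.
    """
    if not artificial:
        return "river" if width_m >= crossover_m else "stream"
    if width_m >= crossover_m:
        return "canal"
    # Below the crossover: a ditch, or a drain if so skinny that it shows
    # little or no visible/potentially visible water.
    return "ditch" if visible_water else "drain"


print(waterway_tag(1.5, artificial=True))                       # ditch
print(waterway_tag(0.3, artificial=True, visible_water=False))  # drain
```

Under this rule most of the artificial waterways that NHD labels as canals would come out as ditch or drain, which is the point made above about the High Line Canal.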

In other parts of the world (like Sudan and India) they have vast expanses of canal-sized irrigation waterways; I haven’t come across much of that in the USA at all.

Comment from Sunfishtommy on 14 April 2016 at 05:09

I work in NC a lot, and I never realized it was so bloated here; I just thought that was the norm. I had noticed that the water features in NC did tend to have a lot of extraneous nodes. In the Raleigh area he has also done some building imports that also have many extraneous nodes (a square house with 6 nodes instead of just 4). That said, he has contributed to the map tremendously.
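
The square-house case is the same redundancy on a closed way: nodes sitting on a straight edge add nothing. Here is a minimal Python sketch that removes them. It assumes local planar meters and a ring given without the closing node repeated. The name `drop_collinear` and the 5 cm default are hypothetical. It checks distance from the line through the neighbours, not the segment, which is fine for building outlines but would also remove a node that doubles back along the same line.

```python
import math


def drop_collinear(ring, tolerance_m=0.05):
    """Remove nodes of a closed way that lie (nearly) on the line between neighbours.

    ring: list of (x, y) in local meters, first point NOT repeated at the end.
    """
    pts = list(ring)
    changed = True
    while changed and len(pts) > 3:
        changed = False
        for i in range(len(pts)):
            prev, cur, nxt = pts[i - 1], pts[i], pts[(i + 1) % len(pts)]
            # Twice the triangle area divided by the base gives the
            # distance of cur from the line through prev and nxt.
            base = math.dist(prev, nxt)
            area2 = abs((nxt[0] - prev[0]) * (cur[1] - prev[1])
                        - (nxt[1] - prev[1]) * (cur[0] - prev[0]))
            if base > 0 and area2 / base <= tolerance_m:
                del pts[i]
                changed = True
                break
    return pts


# A 10 m square house drawn with 6 nodes (two extra mid-edge nodes).
house = [(0, 0), (5, 0), (10, 0), (10, 10), (5, 10), (0, 10)]
print(drop_collinear(house))  # [(0, 0), (10, 0), (10, 10), (0, 10)]
```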

Comment from Sunfishtommy on 14 April 2016 at 05:17

I am going to start simplifying ways in NC when I see them from now on, especially since I work there a lot.

Comment from AlaskaDave on 17 October 2017 at 23:03

I also do a bit of work in NC (Kernersville area), and had often wondered about the awful hydrology data. Thanks for this very illuminating post. I could really use the JOSM simplify tool as you have enhanced it for my work in Alaska and elsewhere. I’ve sent a private email with questions about the tool’s advanced settings in JOSM.