I’ve been looking at how many tiles change when OSM data is updated, in order to better guide resource estimates, and have completed some benchmarks. This is the technical post with details; I’ll be doing a high-level post later.

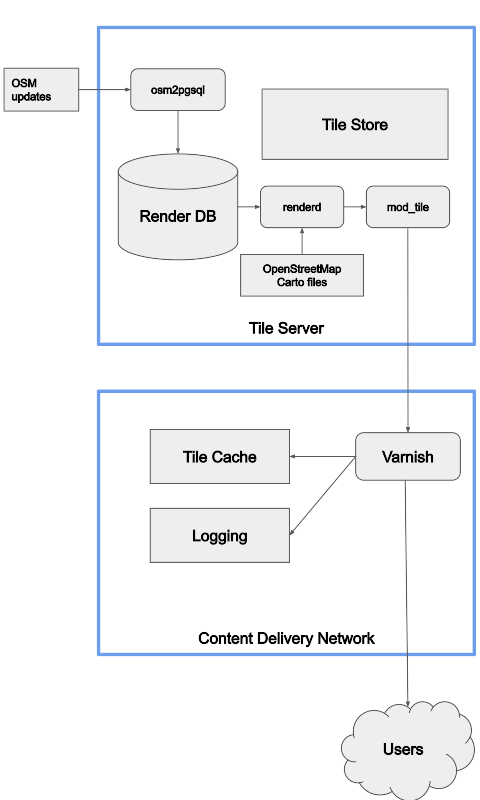

Software like Tilemaker and Planetiler is great for generating a complete set of tiles, updated about once a day, but it can’t handle minutely updates. Most users are fine with daily or slower updates, but OSM.org users are different, and minutely updates are critical for them. All the current ways to generate minutely-updated map tiles involve loading the changes and regenerating any tile whose data may have changed. I used osm2pgsql, the standard way to load OSM data for rendering, but the results should be applicable to other approaches, including different schemas.

Using the Shortbread schema from osm2pgsql-themepark, I loaded the data with osm2pgsql and ran updates. osm2pgsql can output a list of changed tiles (“expired tiles”), and I did this for zoom 1 to 14 for each update. Because I was running this on real data, an update sometimes took longer than 60 seconds to process if it was particularly large, and in that case the next run would combine multiple updates from OSM. Combining multiple updates reduces how much work the server has to do at the cost of less frequent updates. This has been well documented since 2012, but no one has looked at how combining updates affects the number of expired tiles.

For this testing I used a Hetzner server with 2x1TB NVMe drives in RAID0, 64GB of RAM, and an Intel i7-8700 @ 3.2 GHz. Osm2pgsql 1.10 was used, the latest version at the time. The version of themepark was equivalent to the latest version.

The updates were run for a week from 2023-12-30T08:24:00Z to 2024-01-06T20:31:45Z. There were some interruptions in the updates, but I did an update without expiring tiles after the interruptions so they wouldn’t impact the results.

To run the updates I used a simple shell script:

    #!/bin/bash
    set -e

    while :
    do
        # Name each expiry list after the local sequence number before the update
        SEQUENCE=$(osm2pgsql-replication status -d shortbread --json | jq '.local.sequence')
        osm2pgsql-replication update -d shortbread --once -- --expire-tiles=1-14 -o "expire_files/$SEQUENCE.txt"
        sleep 60
    done

Normally I’d set up a systemd service and timer as described in the manual, but this setup was an unusual test where I didn’t want it to automatically restart.

I then used grep to count the number of expired tiles per zoom in each file, creating one list of counts per zoom.

    for z in $(seq 1 14); do
        # grep -c prints one count per expiry file; -h drops the file name
        find "$@" -type f -exec grep -Ech "^$z/" {} + >> "$z.txt"
    done
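The same per-zoom counting can be sketched in Python. This is only an illustration, assuming each line of an expiry list has the form `zoom/x/y`; the function name and the sample tiles are made up for the example and are not real expiry output.

```python
from collections import Counter


def count_tiles_by_zoom(lines):
    """Count expired tiles per zoom level from lines shaped like 'z/x/y'."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        zoom = int(line.split("/", 1)[0])
        counts[zoom] += 1
    return counts


# Hypothetical sample lines, standing in for one expiry file.
sample = ["14/8192/5461", "14/8193/5461", "13/4096/2730", "1/1/0"]
counts = count_tiles_by_zoom(sample)

# A Counter returns 0 for zooms with no tiles, like grep -c does.
print([counts[z] for z in range(1, 15)])
```

Running this once per file and appending each zoom's count to its own list would give the same per-zoom lists as the grep loop.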

This let me use a crude script to get percentiles and the mean, and assemble them into a CSV.

    #!/usr/bin/env python3
    import sys

    import numpy

    # Read the whitespace-separated counts for one zoom level
    nums = numpy.fromfile(sys.argv[1], dtype=int, sep=' ')

    mean = numpy.mean(nums)
    percentiles = numpy.percentile(nums, [0, 1, 5, 25, 50, 75, 95, 99, 100])

    # One CSV row: mean, then p0 through p100, to two decimal places
    print(','.join(f'{v:.2f}' for v in [mean, *percentiles]))

A look at the percentiles for zoom 14 immediately reveals some outliers, with a mean of 249 tiles, median of 113, p99 of 6854, and p100 of 101824. I was curious what was making this so large and found the p100 was with sequence number 5880335, which was also the largest diff. This diff was surrounded by normal-sized diffs, so it wasn’t a lot of data. The data consumed would have been the diff 005/880/336.

A bit of shell magic got me a list of changesets that did something other than add a node:

    osmium cat 005880336.osc.gz -f opl | grep -Ev '^n[[:digit:]]+ v1 ' | cut -d ' ' -f 4 | sort -u | sed 's/^c//'

Looking at the changesets with achavi, 145229319 stood out as taking some time to load. Two of the nodes modified were information boards that were part of the Belarus-Ukraine border and the Belarus-Russia border. Thus, this changeset changed the Russia, Ukraine, and Belarus polygons. As these are large polygons, only the tiles along the edge were considered dirty, but this is still a lot of tiles!
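For anyone who prefers Python to a shell pipeline, the same filtering could be sketched roughly as below. It assumes OPL lines where the second field is the version (`v…`) and the fourth is the changeset (`c…`). The function name and the sample lines, including their IDs and changeset numbers, are invented for illustration.

```python
import re

# A node line at version 1, i.e. a newly created node. The trailing space
# keeps versions such as v10 or v12 from matching.
NEW_NODE = re.compile(r"^n\d+ v1 ")


def changesets_beyond_new_nodes(opl_lines):
    """Return sorted changeset IDs for every object that is not a new node."""
    changesets = set()
    for line in opl_lines:
        if not line.strip() or NEW_NODE.match(line):
            continue
        fields = line.split(" ")
        changeset_field = fields[3]  # e.g. "c200"
        if changeset_field.startswith("c"):
            changesets.add(int(changeset_field[1:]))
    return sorted(changesets)


# Hypothetical OPL lines, shortened to the fields that matter here.
sample = [
    "n1 v1 dV c100 t2024-01-01T00:00:00Z i1 uexample T x1.0 y2.0",
    "n2 v3 dV c200 t2024-01-01T00:00:00Z i1 uexample T x1.0 y2.0",
    "w5 v1 dV c300 t2024-01-01T00:00:00Z i1 uexample T Nn1,n2",
    "n3 v12 dV c200 t2024-01-01T00:00:00Z i1 uexample T x1.0 y2.0",
]
result = changesets_beyond_new_nodes(sample)
print(result)
```

The new node in changeset 100 is dropped, while the modified nodes and the new way are kept.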

After validating that the results make sense, I got the following means and percentiles, which may be useful to others.

Tiles per minute, with updates every minute

| zoom | mean | p0 | p1 | p5 | p25 | p50 | p75 | p95 | p99 | p100 |
|------|------|----|----|----|-----|-----|-----|-----|-----|------|
| z1 | 3.3 | 1 | 2 | 2 | 3 | 3 | 4 | 4 | 4 | 4 |
| z2 | 5.1 | 1 | 2.6 | 3 | 4 | 5 | 6 | 7 | 7 | 10 |
| z3 | 9.1 | 1 | 4 | 5 | 8 | 9 | 11 | 13 | 15 | 24 |
| z4 | 12.8 | 1 | 5 | 7 | 10 | 12 | 15 | 20 | 24 | 52 |
| z5 | 17.1 | 1 | 5 | 8 | 13 | 17 | 20 | 28 | 35 | 114 |
| z6 | 21.7 | 1 | 6 | 9 | 15 | 21 | 26 | 37 | 48 | 262 |
| z7 | 25.6 | 1 | 6 | 9 | 17 | 24 | 31 | 46 | 63 | 591 |
| z8 | 29.2 | 1 | 6 | 9 | 17 | 26 | 34 | 55 | 92 | 1299 |
| z9 | 34.5 | 1 | 6 | 10 | 18 | 28 | 37 | 64 | 173 | 2699 |
| z10 | 44.6 | 1 | 7 | 10 | 20 | 31 | 41 | 80 | 330 | 5588 |
| z11 | 65.6 | 1 | 7 | 12 | 23 | 35 | 49 | 125 | 668 | 11639 |
| z12 | 111 | 1 | 8 | 14 | 29 | 44 | 64 | 238 | 1409 | 24506 |
| z13 | 215 | 1 | 10 | 18 | 40 | 64 | 102 | 527 | 3150 | 52824 |
| z14 | 468 | 1 | 14 | 27 | 66 | 113 | 199 | 1224 | 7306 | 119801 |

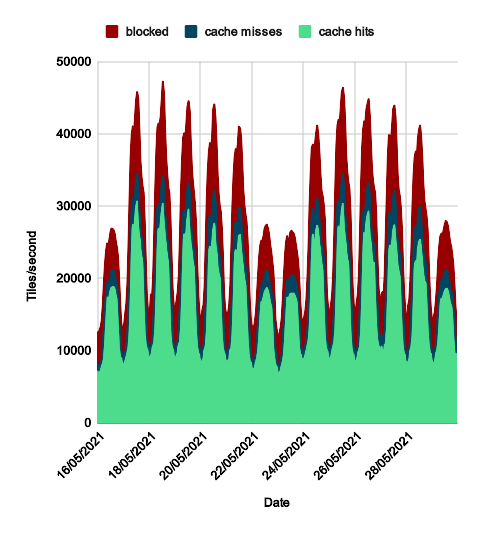

Based on historical OpenStreetMap Carto data, the capacity of a rendering server is about 1 req/s per hardware thread. Current performance is slower, but includes … The new OSMF general purpose servers are mid-range servers with 80 threads, so they should be able to render about 80 tiles per second, or about 4800 tiles per minute. This means that approximately 95% of the time the server will be able to finish re-rendering tiles within the 60 seconds between updates. A couple of times an hour it will be slower.
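The arithmetic behind that estimate can be written out as a rough sketch. It uses the figures from the text (1 tile per second per thread, 80 threads, 60 seconds between updates) and the p95 and p99 columns of the every-minute table. Summing per-zoom percentiles is only an approximation of the percentile of the total, so treat the comparison as indicative.

```python
THREADS = 80
TILES_PER_THREAD_PER_SECOND = 1
UPDATE_INTERVAL_SECONDS = 60

# Tiles one server can render in the window between two updates.
capacity_per_update = THREADS * TILES_PER_THREAD_PER_SECOND * UPDATE_INTERVAL_SECONDS

# p95 and p99 columns for z1..z14 from the every-minute table.
p95 = [4, 7, 13, 20, 28, 37, 46, 55, 64, 80, 125, 238, 527, 1224]
p99 = [4, 7, 15, 24, 35, 48, 63, 92, 173, 330, 668, 1409, 3150, 7306]

print("capacity per update:", capacity_per_update)
print("sum of p95 tiles:", sum(p95), "fits:", sum(p95) <= capacity_per_update)
print("sum of p99 tiles:", sum(p99), "fits:", sum(p99) <= capacity_per_update)
```

The p95 total fits within the capacity while the p99 total does not, which matches the statement that most, but not all, updates finish re-rendering within 60 seconds.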

As mentioned earlier, when updates take over 60 seconds, multiple updates combine into one and reduce the amount of work to be done. I simulated this by merging every k files together. Continuing the theme of patched-together scripts, I did this with a shell script based on StackExchange.

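    k=2
    indir="expire_files_2/"
    dir="expire_2_mod$k"

    # Collect the expiry files in sequence order
    readarray -td $'\0' files < <(
        for f in ./"$indir"/*.txt; do
            if [[ -f "$f" ]]; then printf '%s\0' "$f"; fi
        done |
        sort -zV
    )

    mkdir -p ./"$dir"
    rm -f ./"$dir"/joined-files*.txt

    for i in "${!files[@]}"; do
        # Files 0..k-1 go to joined-files1.txt, k..2k-1 to joined-files2.txt, and so on
        n=$((i/k+1))
        out=./"$dir"/joined-files$n.txt
        touch "$out"
        # sort -o can write to one of its own inputs; a plain > redirect
        # would truncate the joined file before it is read
        sort -u -o "$out" "${files[i]}" "$out"
    done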

Running the results through the same process for percentiles generates numbers in tiles per update, but updates happen only 1/k as often, so in terms of work done per unit of time, all the numbers need to be divided by k. Here are the results for a few values of k.
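As a minimal sketch of that normalisation, assuming each update is represented as a set of tile names, merging and dividing by k looks like the following. The function name and the sample tiles are hypothetical.

```python
def tiles_per_minute_when_merged(updates, k):
    """Merge every k consecutive updates and return tiles per minute for each group.

    `updates` is a list of sets of tile names, one set per minutely update.
    """
    rates = []
    for start in range(0, len(updates), k):
        # A tile expired by several updates in the group is only rendered once.
        merged = set().union(*updates[start:start + k])
        # Divide by k even if the final group is short, as in the shell script.
        rates.append(len(merged) / k)
    return rates


# Hypothetical expiry lists for four consecutive minutely updates.
sample = [
    {"14/1/1", "14/1/2"},
    {"14/1/2", "14/1/3"},
    {"14/9/9"},
    {"14/9/9"},
]
every_minute = tiles_per_minute_when_merged(sample, 1)
every_two_minutes = tiles_per_minute_when_merged(sample, 2)
print(every_minute)
print(every_two_minutes)
```

In the sample, the overlapping tiles mean the merged groups average 1.5 and 0.5 tiles per minute instead of 2 and 1, which is the effect being measured.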

k=2

| zoom | mean | p0 | p1 | p5 | p25 | p50 | p75 | p95 | p99 | p100 |
|------|------|----|----|----|-----|-----|-----|-----|-----|------|
| z1 | 1.7 | 0.5 | 1 | 1 | 1.5 | 1.5 | 2 | 2 | 2 | 2 |
| z2 | 2.5 | 0.5 | 1 | 1.5 | 2 | 2.5 | 3 | 3.5 | 3.5 | 5 |
| z3 | 4.5 | 0.5 | 2 | 2.5 | 4 | 4.5 | 5.5 | 6.5 | 7.5 | 12 |
| z4 | 6.4 | 0.5 | 2.5 | 3.5 | 5 | 6 | 7.5 | 10 | 12.5 | 26 |
| z5 | 8.6 | 0.5 | 2.5 | 4 | 6.5 | 8.5 | 10 | 14 | 17.5 | 51 |
| z6 | 10.9 | 0.5 | 2.9 | 4.5 | 7.5 | 10.5 | 13 | 18.5 | 24.5 | 107 |
| z7 | 13.0 | 0.5 | 3 | 4.5 | 8.5 | 12 | 15.5 | 23 | 32 | 239 |
| z8 | 14.9 | 0.5 | 3 | 4.5 | 9 | 13 | 17 | 27 | 50 | 535 |
| z9 | 17.8 | 0.5 | 3 | 5 | 9.5 | 14 | 18.5 | 32 | 97 | 1127 |
| z10 | 24 | 0.5 | 3 | 5 | 10 | 15.5 | 20.5 | 41 | 192 | 2347 |
| z11 | 36 | 0.5 | 3.5 | 6 | 11.5 | 17.5 | 24 | 65 | 395 | 4888 |
| z12 | 64 | 0.5 | 4 | 7 | 14.5 | 22 | 32 | 120 | 844 | 10338 |
| z13 | 120 | 0.5 | 5 | 9 | 20 | 32 | 50 | 265 | 1786 | 22379 |
| z14 | 263 | 0.5 | 7 | 14 | 33 | 56 | 99 | 617 | 3988 | 50912 |

k=5

| zoom | mean | p0 | p1 | p5 | p25 | p50 | p75 | p95 | p99 | p100 |
|------|------|----|----|----|-----|-----|-----|-----|-----|------|
| z1 | 0.66 | 0.20 | 0.40 | 0.40 | 0.60 | 0.60 | 0.80 | 0.80 | 0.80 | 0.80 |
| z2 | 1.01 | 0.20 | 0.40 | 0.60 | 0.80 | 1.00 | 1.20 | 1.40 | 1.40 | 2.00 |
| z3 | 1.82 | 0.20 | 0.80 | 1.00 | 1.60 | 1.80 | 2.20 | 2.60 | 3.00 | 4.60 |
| z4 | 2.54 | 0.20 | 1.00 | 1.40 | 2.00 | 2.40 | 3.00 | 4.00 | 4.80 | 8.00 |
| z5 | 3.40 | 0.20 | 1.00 | 1.60 | 2.60 | 3.40 | 4.00 | 5.40 | 7.00 | 18.80 |
| z6 | 4.31 | 0.20 | 1.02 | 1.80 | 3.20 | 4.20 | 5.20 | 7.40 | 9.80 | 42.60 |
| z7 | 5.08 | 0.20 | 1.20 | 1.80 | 3.40 | 4.80 | 6.20 | 9.20 | 12.60 | 93.60 |
| z8 | 5.78 | 0.20 | 1.20 | 1.80 | 3.40 | 5.20 | 6.80 | 11.00 | 18.93 | 206.20 |
| z9 | 6.78 | 0.20 | 1.20 | 2.00 | 3.60 | 5.60 | 7.40 | 13.00 | 35.40 | 430.40 |
| z10 | 8.73 | 0.20 | 1.40 | 2.00 | 4.00 | 6.20 | 8.20 | 16.40 | 67.48 | 895.20 |
| z11 | 12.76 | 0.20 | 1.40 | 2.40 | 4.60 | 7.00 | 9.60 | 25.16 | 150.32 | 1865.40 |
| z12 | 21.60 | 0.40 | 1.60 | 2.80 | 5.80 | 8.80 | 12.80 | 47.00 | 328.89 | 3932.40 |
| z13 | 41.88 | 0.40 | 2.00 | 3.60 | 8.00 | 12.80 | 20.60 | 102.08 | 712.36 | 8486.80 |
| z14 | 91.76 | 0.40 | 2.80 | 5.40 | 13.00 | 22.80 | 40.40 | 239.88 | 1597.66 | 19274.40 |

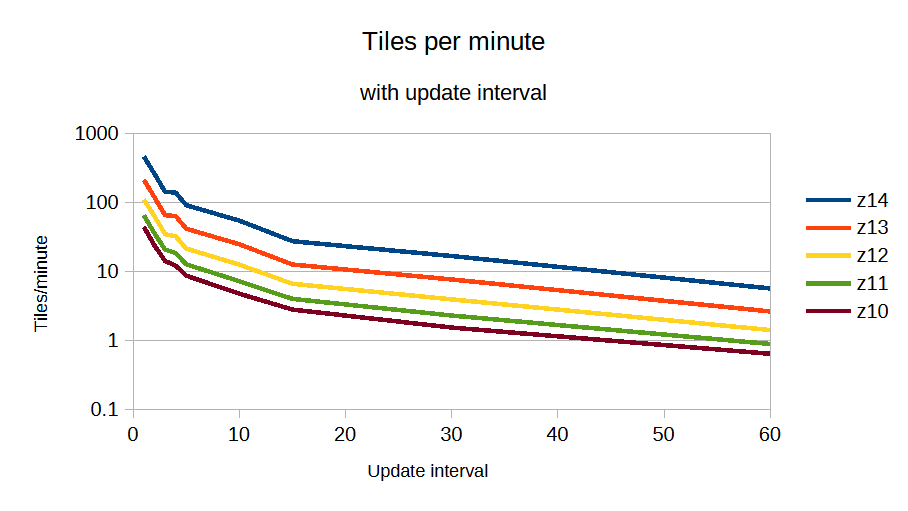

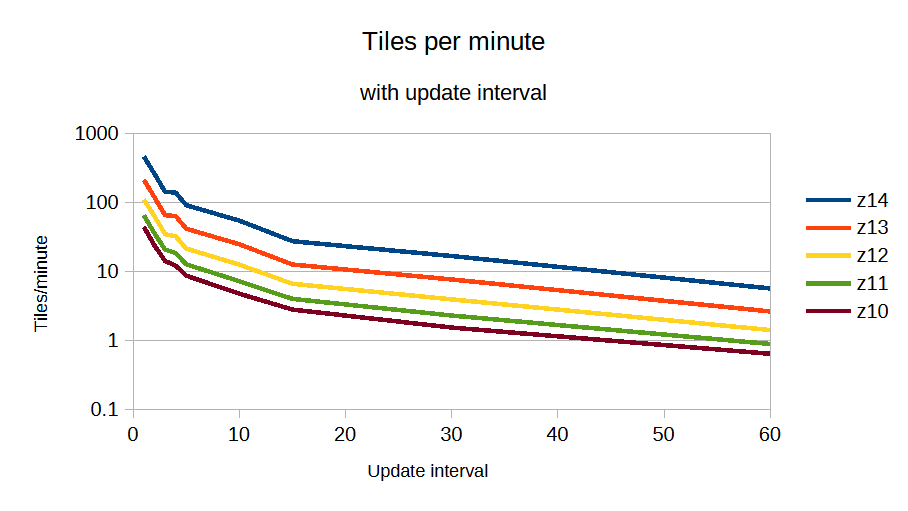

Finally, we can reproduce the Geofabrik graph, plotting tiles per minute against the update interval, and get approximately work ∝ update^-1.05, where update is the number of minutes between updates. This means combining multiple updates is very effective at reducing load.
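A power-law exponent like this can be estimated with a least-squares line fit on log-log axes. The sketch below is not the fit behind the figure above: it only uses the three zoom 14 means from the tables (k = 1, 2 and 5), and the function name is made up for the example.

```python
import math


def fit_power_law(intervals, work):
    """Fit work = prefactor * interval ** exponent by least squares in log-log space."""
    xs = [math.log(v) for v in intervals]
    ys = [math.log(v) for v in work]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    exponent = sxy / sxx
    prefactor = math.exp(mean_y - exponent * mean_x)
    return exponent, prefactor


# Minutes between updates, and mean zoom 14 tiles per minute from the tables.
intervals = [1, 2, 5]
z14_mean = [468, 263, 91.76]

exponent, prefactor = fit_power_law(intervals, z14_mean)
print(f"work ∝ update^{exponent:.2f}")
```

With only three points the result is rough, but it comes out close to -1, in line with the relationship above.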